All of the following variables affect SEO:

- Valid Code

- Fresh Content

- Quality Content

- WordPress

- Load Speed

- AMP

- Security

- User Experience

- Colour Combinations

- Text Legibility

- Alt Tags

- XML Sitemap

- Keyword Use

- Image Optimisation

- <h1>

- <title>

- Meta Description

- Server Location

- 300 Words Minimum

- Original Content

- Readability

- Breadcrumbs

- YouTube Videos

- Well Structured Site (Silo Structure)

- Mobile Friendly

- Domain Name

- Age Of Website
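Several of the on-page items in this list (the title, the h1, the meta description, alt text, and the 300-word minimum) can be checked automatically. The following is only a rough sketch using Python's standard `html.parser`; the class name `OnPageAudit`, the function `audit`, and the report keys are made up for illustration, and the checks are simplified assumptions rather than how any search engine actually scores a page.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Collects a few on-page signals from an HTML string (illustrative helper)."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self.meta_description = None
        self.images_missing_alt = 0
        self.word_count = 0
        self._stack = []  # open <title>, <script> or <style> tags

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "script", "style"):
            self._stack.append(tag)
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if self._stack and self._stack[-1] == tag:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack and self._stack[-1] == "title":
            self.title += data
        elif not self._stack:
            # Visible text only: script and style contents are skipped.
            self.word_count += len(data.split())


def audit(html):
    """Return a small report of pass/fail checks for one page."""
    parser = OnPageAudit()
    parser.feed(html)
    parser.close()
    return {
        "has_title": bool(parser.title.strip()),
        "single_h1": parser.h1_count == 1,
        "has_meta_description": bool(parser.meta_description),
        "images_missing_alt": parser.images_missing_alt,
        "meets_300_words": parser.word_count >= 300,
    }
```

Calling `audit(page_html)` on the HTML of a page returns a dictionary that can be compared against the checklist. Factors such as server location, domain age, or content quality cannot be judged from the markup alone, so they are left out of this sketch.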

SERP

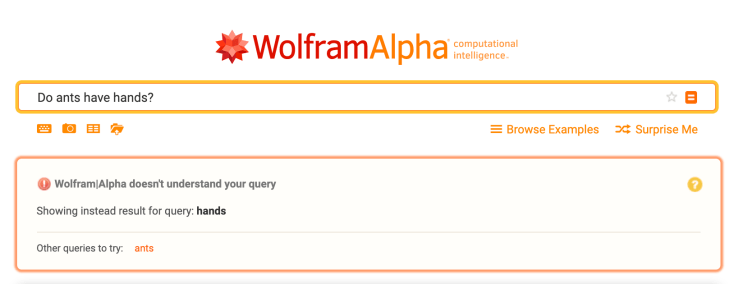

I asked three search engines (Google, DuckDuckGo, and Wolfram Alpha) the same question: “Do ants have hands?” Google directed me to a page about the anatomy of an ant, written by a biologist, which was exactly what I needed. The answer I found there was “Ants don’t have grasping forelegs, so they use their mandibles like human hands to hold and carry things.” DuckDuckGo led me to a result from Yahoo Answers (where anyone can post an answer). The first answer was “:D u have interesting questions u ok? umm i recon that ants have little teeny hands and 5 fingers lyk us:D”, which was not a serious answer and therefore not what I was looking for. Wolfram Alpha did not have an answer for the question.

What are Web Crawlers and how do they work?

A web crawler is an Internet bot that systematically browses the web for the purpose of indexing. Search engines and some other sites use web crawling (or spidering) software to update their own web content or their indices of other sites’ content. Crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on the systems they visit and often visit sites without approval, so issues of schedule, load, and “politeness” come into play when large collections of pages are accessed. Public sites that do not wish to be crawled can make this known to the crawling agent. For example, a robots.txt file can ask bots to index only parts of a website, or nothing at all.
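To make the robots.txt idea concrete, here is a small sketch of how a polite crawler might check the rules before fetching a page. It uses `urllib.robotparser` from Python's standard library. The robots.txt contents, the bot name `ExampleBot`, and the example.com URLs are all invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every bot may crawl the site except /private/,
# and is asked to wait 5 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
Allow: /
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = build_parser(ROBOTS_TXT)

print(rules.can_fetch("ExampleBot", "https://example.com/index.html"))          # True
print(rules.can_fetch("ExampleBot", "https://example.com/private/notes.html"))  # False
print(rules.crawl_delay("ExampleBot"))                                          # 5
```

A real crawler would normally load the file from the site itself (for example with `set_url` and `read`) rather than from a string. Keep in mind that robots.txt is only a request: well-behaved bots follow it, but nothing forces a crawler to obey.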

What is a sitemap?

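A sitemap is a list of every web page on a website. Search engines read it to discover which pages to crawl, and it is usually published as an XML file.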
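As a rough illustration, an XML sitemap in the sitemaps.org format can be built with Python's standard `xml.etree.ElementTree`. The function name `build_sitemap` and the example.com URLs are placeholders, and optional fields such as the last-modified date are left out to keep the sketch short.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc>…</loc></url> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


pages = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/blog/do-ants-have-hands",
]
print(build_sitemap(pages))
```

The resulting text would typically be saved as `sitemap.xml` at the root of the site. On a WordPress site this file is usually generated by the platform or a plugin rather than by hand.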

Google Algorithms

- Panda (2011) – A search filter introduced in February 2011, meant to stop sites with poor-quality content from working their way into Google’s top search results. Panda is updated from time to time. When this happens, sites previously hit may escape if they have made the right changes, and sites that escaped before may be caught. A refresh also means “false positives” might get released.

- Penguin (2012) – The Penguin algorithm is a filter that sits on top of Google’s regular algorithm and attempts to catch link spam. Link spam refers to the manipulative ways that spammers and black hat SEOs create links to boost their rankings in the SERPs.

- Page Layout (2012) – The page layout algorithm update targeted websites with too many static advertisements above the fold. These ads would force users to scroll down the page to see content.

- Exact Match Domain (2012) – The intent behind this update was not to target exact match domain names exclusively, but to target sites with the following combination of spammy tactics: exact match domains that were also poor quality sites with thin content.

- Hummingbird (2013) – The Hummingbird update is designed to be faster and to better understand the context of a web page.

- Payday (2013) – Targeted spammy queries mostly associated with shady industries (including super high-interest loans and payday loans, porn, casinos, debt consolidation, and pharmaceuticals).

- Pigeon (2014) – Provides more useful, relevant and accurate local search results that are tied more closely to traditional web search ranking signals. Google stated that this new algorithm improves their distance and location ranking parameters.

- Mobilegeddon (2015) – This update provided no grey area. Your pages were either mobile-friendly, or they weren’t. There was no in-between.

- RankBrain (2015) – Used to sort live search results to help give users the best fit to their search query.

- Possum (2016) – Diversified the local results and helped prevent spam from ranking.

- Fred (2017) – A catchall name for any quality-related algorithm update that Google does not otherwise identify. Used to curb the overuse of ads.

- Speed (2018) – Made page speed a ranking factor for mobile searches. Linked with AMP, which is designed to make mobile pages load faster even in an area with a poor connection.
